関連記事

- Part 1: 環境セットアップ

- Part 2: System call Interface

- Part 3: VFS

- Part 4: ext2 (1) write_iter

- Part 5: ext2 (2) write_begin

- Part 6: ext2 (3) get_block

- Part 7: ext2 (4) write_end

- Part 8: writeback (1) work Queue

- Part 9: writeback (2) wb_writeback

- Part 10: writeback (3) writepages

- Part 11: writeback (4) write_inode

- Part 12: block (1) submit_bio

- Part 13: block (2) blk_mq

- Part 14: I/O scheduler (1) mq-deadline

- Part 15: I/O scheduler (2) insert_request

- Part 16: I/O scheduler (3) dispatch_request

- Part 17: block (3) blk_mq_run_work_fn

- Part 18: block (4) block: blk_mq_do_dispatch_sched

- Part 19: MMC (1) initialization

- Part 20: PL181 (1) mmci_probe

- Part 21: MMC (2) mmc_start_host

- Part 22: MMC (3) mmc_rescan

概要

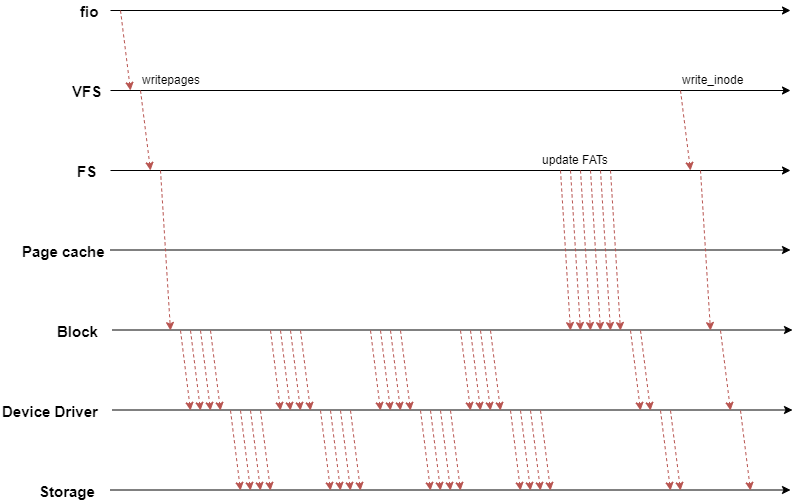

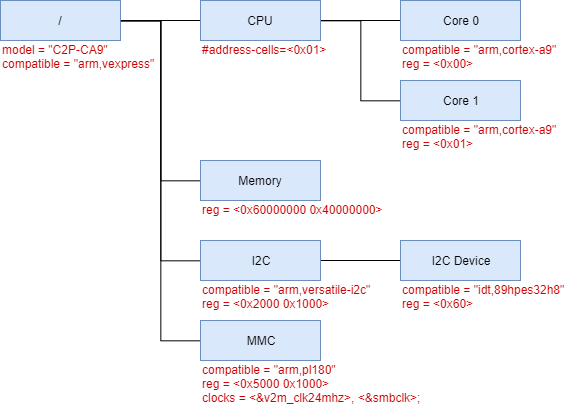

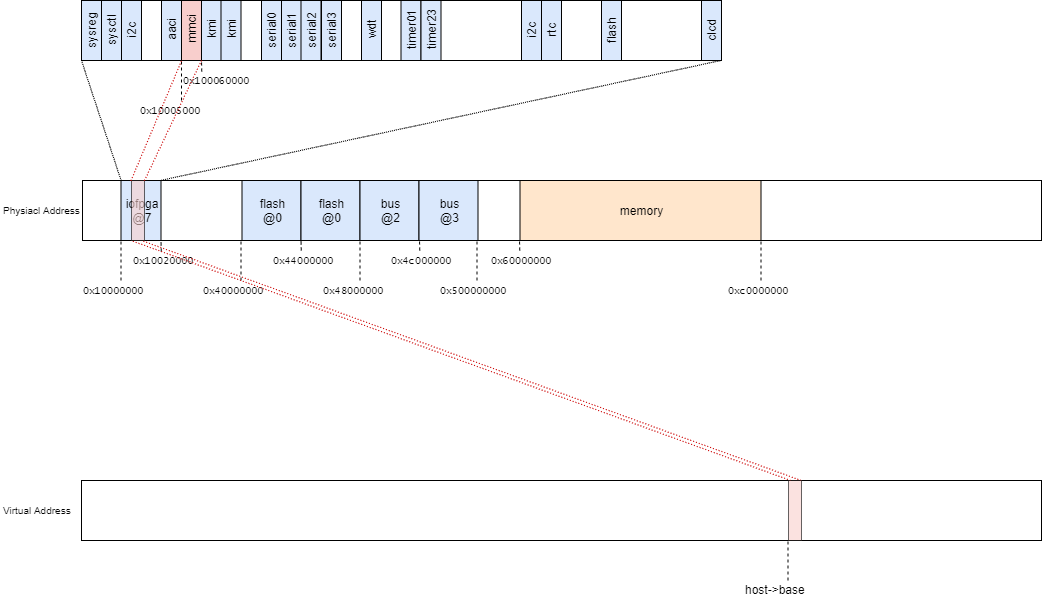

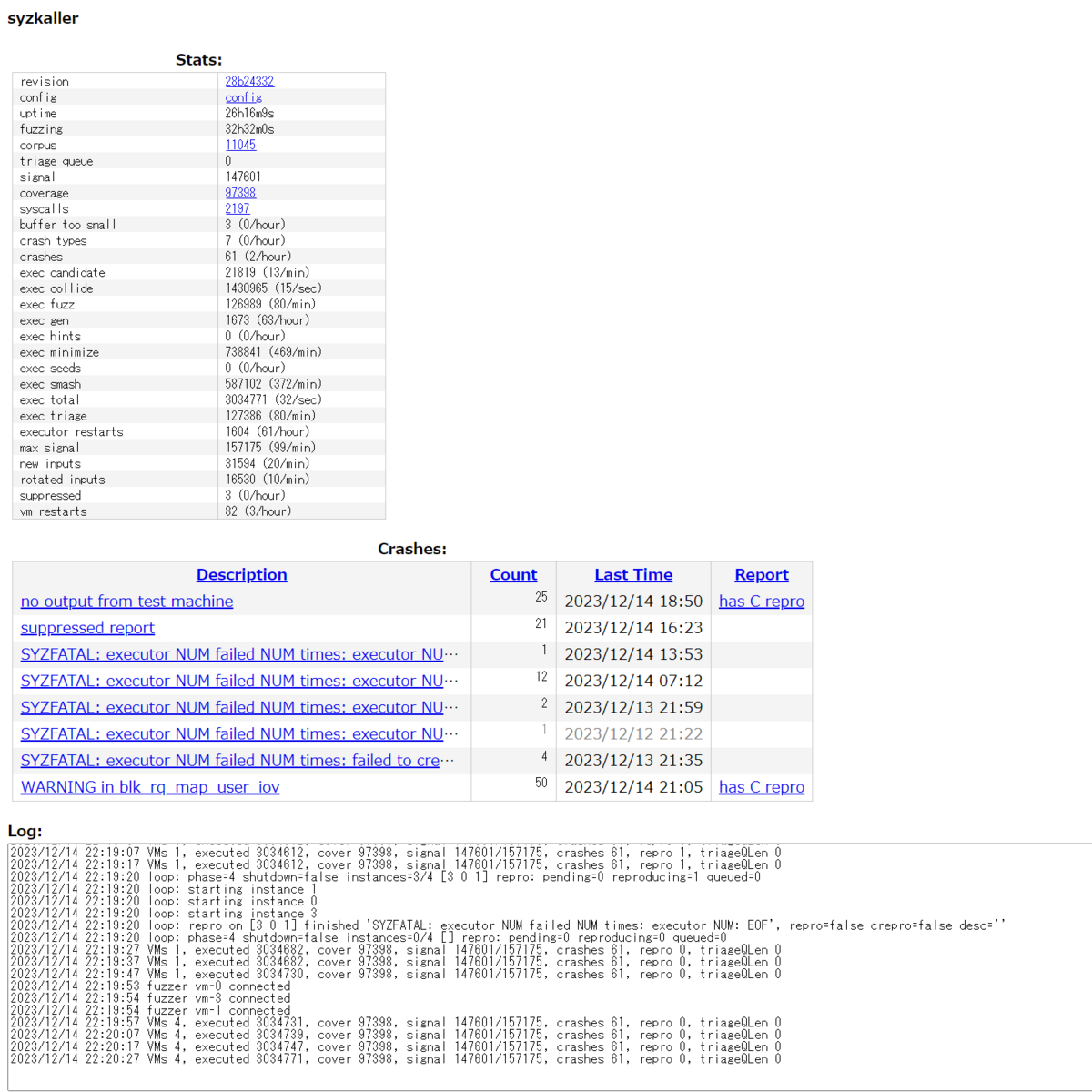

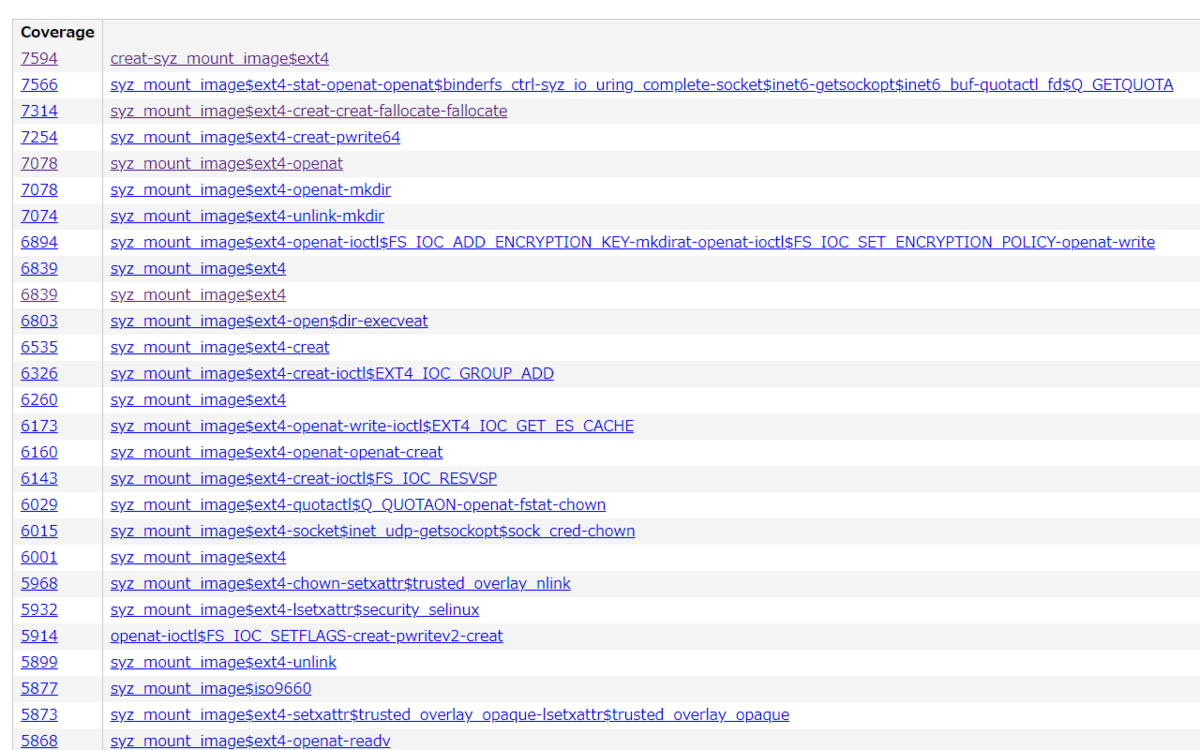

QEMUの vexpress-a9 (arm) で Linux 5.15を起動させながら、ファイル書き込みのカーネル処理を確認していく。

本章では、カードの識別処理の概要を確認した。

はじめに

ユーザプロセスはファイルシステムという機構によって記憶装置上のデータをファイルという形式で書き込み・読み込みすることができる。

本調査では、ユーザプロセスがファイルに書き込み要求を実行したときにLinuxカーネルではどのような処理が実行されるかを読み解いていく。

調査対象や環境などはPart 1: 環境セットアップを参照。

一部の仕様書は非公開となっているため、公開情報からの推測が含まれています。そのため、内容に誤りが含まれている恐れがります。

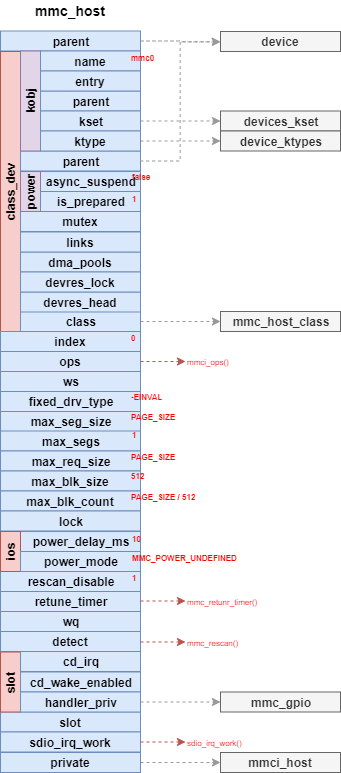

MMC の rescan

mmc_add_host関数でWorkQueueに追加された detect によって、カード検出処理 mmc_rescan関数を呼び出す。

mmc_rescan関数の定義は次のようになっている。

// 2188: void mmc_rescan(struct work_struct *work) { struct mmc_host *host = container_of(work, struct mmc_host, detect.work); int i; if (host->rescan_disable) return; /* If there is a non-removable card registered, only scan once */ if (!mmc_card_is_removable(host) && host->rescan_entered) return; host->rescan_entered = 1; if (host->trigger_card_event && host->ops->card_event) { mmc_claim_host(host); host->ops->card_event(host); mmc_release_host(host); host->trigger_card_event = false; } /* Verify a registered card to be functional, else remove it. */ if (host->bus_ops) host->bus_ops->detect(host); host->detect_change = 0; /* if there still is a card present, stop here */ if (host->bus_ops != NULL) goto out; mmc_claim_host(host); if (mmc_card_is_removable(host) && host->ops->get_cd && host->ops->get_cd(host) == 0) { mmc_power_off(host); mmc_release_host(host); goto out; } /* If an SD express card is present, then leave it as is. */ if (mmc_card_sd_express(host)) { mmc_release_host(host); goto out; } for (i = 0; i < ARRAY_SIZE(freqs); i++) { unsigned int freq = freqs[i]; if (freq > host->f_max) { if (i + 1 < ARRAY_SIZE(freqs)) continue; freq = host->f_max; } if (!mmc_rescan_try_freq(host, max(freq, host->f_min))) break; if (freqs[i] <= host->f_min) break; } mmc_release_host(host); out: if (host->caps & MMC_CAP_NEEDS_POLL) mmc_schedule_delayed_work(&host->detect, HZ); }

MMCバスや関連するコンポーネントが利用不可能の状態であるとき、 rescan_disable によってカード検出ロジックを無効にすることができる。

導入パッチによると、sus/res中のMMC/SDメモリカード抜去による対応となっている。

v5.15時点では、この変数は mmc_start_host関数によって初期化、mmc_stop_host関数によって設定される。

また、リムーバブルメディアでない(mmc_card_is_removable)場合、 カード検出ロジックを何度も実施する必要がないため、host->rescan_entered に一度実施したかどうかを設定する。

今回のSDメモリカードはリムーバルメディア(non-removable)であるため、以降の処理を呼び出すことになる。

ホストコントローラによっては、カード挿入/抜去時に追加のアクションが必要になる。

そのようなホストコントローラは、trigger_card_eventをセットしておくことで card_eventを呼ぶことができる。

MMCIではそのような制御が不要であるため、trigger_card_eventは設定されていない。

以降のmmc_rescan_try_freq関数の処理が正常に終了している場合、 host->bus_opsに SD/SDIO/MMCカード毎の初期化処理が登録される。

その場合には、抜去や再挿入といったカードの変更を検出するために、bus->bus_ops->detectを呼び出す。

この時に設定される detect_change は、カードの抜去を検知できたことを示す。

ここで、SD specification v7.0 から規格化された SD Express Memory Cards の条件分岐が入る。 SD Express Memory Cards では、後方互換性のために従来のシーケンスでの初期化をするが、ここで分岐することになる。

その後、mmc_rescan_try_freq関数によって周波数の設定を試みる。

ただし、カードによっては初期周波数 400KHz が対応できないことがあるため、400KHz、300KHz、200KHz、100KHz の順にリトライする。

周波数の設定

mmc_rescan_try_freq関数の定義は次のようになっている。

// 2035: static int mmc_rescan_try_freq(struct mmc_host *host, unsigned freq) { host->f_init = freq; pr_debug("%s: %s: trying to init card at %u Hz\n", mmc_hostname(host), __func__, host->f_init); mmc_power_up(host, host->ocr_avail); /* * Some eMMCs (with VCCQ always on) may not be reset after power up, so * do a hardware reset if possible. */ mmc_hw_reset_for_init(host); /* * sdio_reset sends CMD52 to reset card. Since we do not know * if the card is being re-initialized, just send it. CMD52 * should be ignored by SD/eMMC cards. * Skip it if we already know that we do not support SDIO commands */ if (!(host->caps2 & MMC_CAP2_NO_SDIO)) sdio_reset(host); mmc_go_idle(host); if (!(host->caps2 & MMC_CAP2_NO_SD)) { if (mmc_send_if_cond_pcie(host, host->ocr_avail)) goto out; if (mmc_card_sd_express(host)) return 0; } /* Order's important: probe SDIO, then SD, then MMC */ if (!(host->caps2 & MMC_CAP2_NO_SDIO)) if (!mmc_attach_sdio(host)) return 0; if (!(host->caps2 & MMC_CAP2_NO_SD)) if (!mmc_attach_sd(host)) return 0; if (!(host->caps2 & MMC_CAP2_NO_MMC)) if (!mmc_attach_mmc(host)) return 0; out: mmc_power_off(host); return -EIO; }

mmc_rescan_try_freq関数の引数で渡された freq を 初期周波数として host->f_initに設定する。

その後、mmc_power_up関数によって POEWER ON 状態に繊維される。

ただし、ここではmmc_start_host関数によって状態となっているため、処理はスキップする。

ここで、eMMC によっては 電源投入後にハードウェアリセットされないものもある。

そういったデバイスのために、mmc_hw_reset_for_init関数によってホストコントローラからハードウェアリセットさせる仕組み (host->ops->hw_reset) が提供されている。

例えば、Raspberry Pi などで使用されている bcm2835 では hw_reset に独自の処理が設定されていたりする。

しかし、今回の環境では該当しないため、mmc_hw_reset_for_init関数では何も処理をせず、すぐに return される。

SDIOの初期化

sdio_reset関数は、SDIOベースのI/Oカードを初期化するための関数である。

// 202: int sdio_reset(struct mmc_host *host) { int ret; u8 abort; /* SDIO Simplified Specification V2.0, 4.4 Reset for SDIO */ ret = mmc_io_rw_direct_host(host, 0, 0, SDIO_CCCR_ABORT, 0, &abort); if (ret) abort = 0x08; else abort |= 0x08; return mmc_io_rw_direct_host(host, 1, 0, SDIO_CCCR_ABORT, abort, NULL); }

初期化には power reset または CMD52 の二通りのやり方が存在する。

sdio_reset関数では、CMD52を発行することで、これを実現する。

ただし、この処理はCMD0より前に発行しなければならない。

実処理は mmc_io_rw_direct_host関数が担っている。

この関数の詳細は省くが、mmc_wait_for_cmd関数によって指定されたコマンドを発行するものである。

sd_reset関数の一つ目のmmc_io_rw_direct_host関数が呼ばれたとき、次のようなデバッグメッセージが確認することができる。

[ 1.205283][ T48] mmc0: starting CMD52 arg 00000c00 flags 00000195

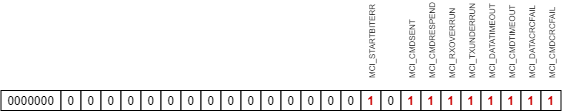

これは、CMD52 によって レジスタ Card Common Control Registers(CCCR) の SDIO_CCCR_ABORTの値を読み込みをしている。

しかし今回は、SDメモリカードであるため ETIMEDOUT となり失敗する。

その後、二つ目のmmc_io_rw_direct_host関数が呼ばれたとき、次のようなデバッグメッセージが確認することができる。

[ 1.207221][ T48] mmc0: starting CMD52 arg 80000c08 flags 00000195

これは、CMD52 によって レジスタ Card Common Control Registers(CCCR) の SDIO_CCCR_ABORTの値に0x08を書き込みしている。

しかし今回は、SDメモリカードであるため ETIMEDOUT となり失敗する。

カードを初期状態に戻す

SD規格ファミリーのカードでは特定の初期化シーケンスが必要となる。

mmc_go_idle関数は、初期化シーケンスに移る前に、カードを初期状態する。

mmc_go_idle関数の定義は次のようになっている。

// 139: int mmc_go_idle(struct mmc_host *host) { int err; struct mmc_command cmd = {}; /* * Non-SPI hosts need to prevent chipselect going active during * GO_IDLE; that would put chips into SPI mode. Remind them of * that in case of hardware that won't pull up DAT3/nCS otherwise. * * SPI hosts ignore ios.chip_select; it's managed according to * rules that must accommodate non-MMC slaves which this layer * won't even know about. */ if (!mmc_host_is_spi(host)) { mmc_set_chip_select(host, MMC_CS_HIGH); mmc_delay(1); } cmd.opcode = MMC_GO_IDLE_STATE; cmd.arg = 0; cmd.flags = MMC_RSP_SPI_R1 | MMC_RSP_NONE | MMC_CMD_BC; err = mmc_wait_for_cmd(host, &cmd, 0); mmc_delay(1); if (!mmc_host_is_spi(host)) { mmc_set_chip_select(host, MMC_CS_DONTCARE); mmc_delay(1); } host->use_spi_crc = 0; return err; }

ここで、SPIモードではない場合には Chip Select(CS)がアクティブになることを防ぐ必要がある。

mmc_go_idle関数が呼ばれたとき、次のようなデバッグメッセージが確認することができる。

[ 1.212842][ T48] mmc0: starting CMD0 arg 00000000 flags 000000c0

これは、CMD0によってソフトウェアリセットをかけている。

カードの識別

ここから、SD規格ファミリーのデバイスを識別する。

デバイスツリーのProperty(no-sdio,no-sd, no-mmc)を設定していない場合には、SD express/SDIO/SD/MMCの順番に確認していく。

SD express

// 2059: if (!(host->caps2 & MMC_CAP2_NO_SD)) { if (mmc_send_if_cond_pcie(host, host->ocr_avail)) goto out; if (mmc_card_sd_express(host)) return 0; }

mmc_send_if_pcie関数は、動作電圧を確認する__mmc_send_if_cond関数のラッパーとなっている。

ここでは、mmc_send_if_pcie関数の詳細は割愛するが、この関数を実行したとき、次のようなデバッグメッセージが確認することができる。

[ 1.232039][ T48] mmc0: starting CMD8 arg 000001aa flags 000002f5

これは、CMD8によって ocr レジスタに 動作電圧 (PCIe) のサポート状況を確認する。

今回使用しているカードは SD express ではないため、mmc_card_sd_express関数で弾かれる。

SDIO

// 2069: if (!(host->caps2 & MMC_CAP2_NO_SDIO)) if (!mmc_attach_sdio(host)) return 0;

SDIOカードの識別はmmc_attach_sdio関数内のmmc_send_io_op_cond関数で実施される。

// 18: int mmc_send_io_op_cond(struct mmc_host *host, u32 ocr, u32 *rocr) { struct mmc_command cmd = {}; int i, err = 0; cmd.opcode = SD_IO_SEND_OP_COND; cmd.arg = ocr; cmd.flags = MMC_RSP_SPI_R4 | MMC_RSP_R4 | MMC_CMD_BCR; for (i = 100; i; i--) { err = mmc_wait_for_cmd(host, &cmd, MMC_CMD_RETRIES); if (err) break; /* if we're just probing, do a single pass */ if (ocr == 0) break; /* otherwise wait until reset completes */ if (mmc_host_is_spi(host)) { /* * Both R1_SPI_IDLE and MMC_CARD_BUSY indicate * an initialized card under SPI, but some cards * (Marvell's) only behave when looking at this * one. */ if (cmd.resp[1] & MMC_CARD_BUSY) break; } else { if (cmd.resp[0] & MMC_CARD_BUSY) break; } err = -ETIMEDOUT; mmc_delay(10); } if (rocr) *rocr = cmd.resp[mmc_host_is_spi(host) ? 1 : 0]; return err; }

mmc_send_io_ops_cond関数が呼ばれたとき、次のようなデバッグメッセージが確認することができる。

[ 1.232680][ T48] mmc0: starting CMD5 arg 00000000 flags 000002e1

SDIOの判定は、CMD5 を発行したときの結果を確認することで確認できる。

今回は、SDメモリカードであるため ETIMEDOUT となり失敗する。

SD

// 2073: if (!(host->caps2 & MMC_CAP2_NO_SD)) if (!mmc_attach_sd(host)) return 0;

SDの識別はmmc_attach_sd関数内のmmc_send_app_op_cond関数で実施される。

// 118: int mmc_send_app_op_cond(struct mmc_host *host, u32 ocr, u32 *rocr) { struct mmc_command cmd = {}; int i, err = 0; cmd.opcode = SD_APP_OP_COND; if (mmc_host_is_spi(host)) cmd.arg = ocr & (1 << 30); /* SPI only defines one bit */ else cmd.arg = ocr; cmd.flags = MMC_RSP_SPI_R1 | MMC_RSP_R3 | MMC_CMD_BCR; for (i = 100; i; i--) { err = mmc_wait_for_app_cmd(host, NULL, &cmd); if (err) break; /* if we're just probing, do a single pass */ if (ocr == 0) break; /* otherwise wait until reset completes */ if (mmc_host_is_spi(host)) { if (!(cmd.resp[0] & R1_SPI_IDLE)) break; } else { if (cmd.resp[0] & MMC_CARD_BUSY) break; } err = -ETIMEDOUT; mmc_delay(10); } if (!i) pr_err("%s: card never left busy state\n", mmc_hostname(host)); if (rocr && !mmc_host_is_spi(host)) *rocr = cmd.resp[0]; return err; }

mmc_send_app_ops_cond関数が呼ばれたとき、次のようなデバッグメッセージが確認することができる。

[ 1.233542][ T48] mmc0: starting CMD55 arg 00000000 flags 000000f5 [ 1.233807][ T48] mmc0: starting CMD41 arg 00000000 flags 000000e1

SDIOの判定は、ACMD41 を発行したときの結果を確認することで確認できる。 ちなみに、CMD55は次のコマンドがアプリケーションコマンドであることを表している。

MMC

// 2077: if (!(host->caps2 & MMC_CAP2_NO_MMC)) if (!mmc_attach_mmc(host)) return 0;

MMCの識別はmmc_attach_mmc関数内のmmc_send_op_cond関数で実施される。

// 176: int mmc_send_op_cond(struct mmc_host *host, u32 ocr, u32 *rocr) { struct mmc_command cmd = {}; int i, err = 0; cmd.opcode = MMC_SEND_OP_COND; cmd.arg = mmc_host_is_spi(host) ? 0 : ocr; cmd.flags = MMC_RSP_SPI_R1 | MMC_RSP_R3 | MMC_CMD_BCR; for (i = 100; i; i--) { err = mmc_wait_for_cmd(host, &cmd, 0); if (err) break; /* wait until reset completes */ if (mmc_host_is_spi(host)) { if (!(cmd.resp[0] & R1_SPI_IDLE)) break; } else { if (cmd.resp[0] & MMC_CARD_BUSY) break; } err = -ETIMEDOUT; mmc_delay(10); /* * According to eMMC specification v5.1 section 6.4.3, we * should issue CMD1 repeatedly in the idle state until * the eMMC is ready. Otherwise some eMMC devices seem to enter * the inactive mode after mmc_init_card() issued CMD0 when * the eMMC device is busy. */ if (!ocr && !mmc_host_is_spi(host)) cmd.arg = cmd.resp[0] | BIT(30); } if (rocr && !mmc_host_is_spi(host)) *rocr = cmd.resp[0]; return err; }

mmc_send_op_cond関数が呼ばれたとき、次のようなデバッグメッセージが確認することができる。

[ 1.372548][ T34] mmc0: starting CMD1 arg 00000000 flags 000000e1

MMCの判定は、CMD1 を発行したときの結果を確認することで確認できる。

おわりに

本記事では、カーネル起動時に呼び出される mmc_rescan_try_freq関数について確認した。

この関数では、カード検出ロジックが組み込まれており、カード検出には対応するCMDのレスポンスで判断できる。

変更履歴

- 2024/03/10: 記事公開

参考

- SD規格の概要 | SD Association

- SD規格の概要

- MMC/SDCの使いかた

- MMC/SDC の仕組みの解説

- SDカード

- SDカードのコマンドの解説

- LINUX MMC 子系统分析之六 MMC card添加流程分析_mmc_card-CSDN博客

- 車載外部ストレージ バックナンバー | シミュレーションの世界に引きこもる部屋

- SDカードの仕様の解説

- SDIOについて #sdio - Qiita

- SDIOの仕様の解説